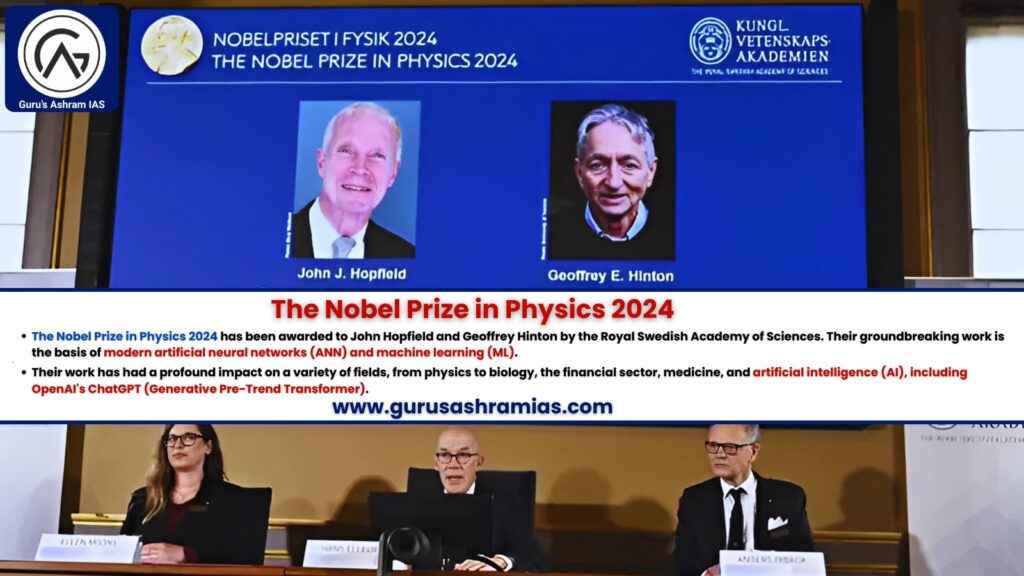

The Nobel Prize in Physics for 2024

- The Nobel Prize in Physics 2024 has been awarded to John Hopfield and Geoffrey Hinton by the Royal Swedish Academy of Sciences. Their groundbreaking work is the basis of modern artificial neural networks (ANN) and machine learning (ML).

- Their work has had a profound impact on a variety of fields, from physics to biology, the financial sector, medicine, and artificial intelligence (AI), including tools such as OpenAI’s ChatGPT (GPT stands for Generative Pre-trained Transformer).

The contribution of John Hopfield:

The Hopfield network:

- John Hopfield is known for creating the Hopfield network, a type of recurrent neural network (RNN) that has been foundational in ANN and AI.

- The Hopfield network, developed in the 1980s, was designed to store simple binary patterns (0 and 1) in a network of artificial nodes (artificial neurons).

- A key feature of this network is associative memory: it can retrieve complete information from incomplete or distorted input, much as the human brain recalls a memory when triggered by a familiar sensation such as a smell.

- The Hopfield network is based on Hebbian learning (a concept in neuropsychology where repeated interactions between neurons strengthen their connections).

- Drawing on statistical physics and parallels with atomic behavior, Hopfield achieved pattern recognition and noise reduction by having the network minimize an energy function, so that it settles into the stored pattern closest to its input. This was a major breakthrough in the development of neural networks and artificial intelligence (AI) because it mimics functions of the biological brain.
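The storage and recall behavior described above can be sketched in a few lines of NumPy. This is a minimal illustration, not Hopfield's original formulation: the function names (`to_bipolar`, `train_hebbian`, `energy`, `recall`) and the two example patterns are assumptions chosen for the demo, and the 0/1 patterns are mapped to -1/+1 because that is the form the simple Hebbian rule used here expects.

```python
import numpy as np

def to_bipolar(bits):
    """Map 0/1 patterns to -1/+1, the form the Hebbian rule below expects."""
    return 2 * np.asarray(bits) - 1

def train_hebbian(patterns):
    """Hebbian rule: units that are active together get a stronger connection."""
    patterns = np.asarray(patterns, dtype=float)
    n_units = patterns.shape[1]
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)  # no self-connections
    return weights

def energy(weights, state):
    """Energy of a state; stored patterns sit at low-energy minima."""
    return -0.5 * state @ weights @ state

def recall(weights, state, max_sweeps=10):
    """Update one unit at a time until no unit changes (a local energy minimum)."""
    state = np.array(state, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            new_value = 1.0 if weights[i] @ state >= 0 else -1.0
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two illustrative 8-unit patterns (hypothetical data, chosen to be orthogonal).
stored = to_bipolar([[1, 1, 1, 1, 0, 0, 0, 0],
                     [1, 0, 1, 0, 1, 0, 1, 0]])
weights = train_hebbian(stored)

noisy = stored[0].copy()
noisy[0] = -noisy[0]  # corrupt one unit of the first pattern
recovered = recall(weights, noisy)

print(np.array_equal(recovered, stored[0]))                # True
print(energy(weights, noisy), energy(weights, recovered))  # -1.5 -3.0
```

The corrupted input has higher energy than the stored pattern, and the unit-by-unit updates move the state downhill until it lands on the stored pattern, which is the associative-memory effect in miniature.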

Impact:

- Hopfield’s model system is used to solve computational tasks, complete patterns, and improve image processing.

The contribution of Geoffrey Hinton:

Restricted Boltzmann machines (RBMs):

- Building on Hopfield’s work, in the 2000s Hinton developed a learning algorithm for restricted Boltzmann machines (RBMs) that enabled deep learning by connecting multiple layers of neurons.

- RBMs were able to learn from examples rather than explicit instructions. This enabled the machine to recognize new patterns based on similarity with previously learned data.

- Boltzmann machines could also identify examples from categories they had not seen before, provided those examples conformed to the patterns learned during training.
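To make "learning from examples" concrete, here is a small sketch of training an RBM. The text above does not name the learning algorithm; this sketch assumes one-step contrastive divergence (CD-1), the training rule commonly associated with Hinton's work on RBMs. The layer sizes, learning rate, toy data, and the helper name `cd1_step` are all illustrative assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cd1_step(v0, weights, b_visible, b_hidden, rng, lr=0.1):
    """One CD-1 update on a batch of binary rows; returns the reconstruction error."""
    # Positive phase: hidden activations driven by the data.
    h0_prob = sigmoid(v0 @ weights + b_hidden)
    h0 = (rng.random(h0_prob.shape) < h0_prob).astype(float)
    # Negative phase: reconstruct the visible units, then the hidden units again.
    v1_prob = sigmoid(h0 @ weights.T + b_visible)
    h1_prob = sigmoid(v1_prob @ weights + b_hidden)
    # Move the parameters toward the data statistics and away from the
    # reconstruction statistics (arrays are updated in place).
    batch = v0.shape[0]
    weights += lr * (v0.T @ h0_prob - v1_prob.T @ h1_prob) / batch
    b_visible += lr * (v0 - v1_prob).mean(axis=0)
    b_hidden += lr * (h0_prob - h1_prob).mean(axis=0)
    return float(np.mean((v0 - v1_prob) ** 2))

rng = np.random.default_rng(0)
# Toy training set (hypothetical): two 6-bit patterns, each repeated 10 times.
data = np.array([[1, 1, 1, 0, 0, 0],
                 [0, 0, 0, 1, 1, 1]] * 10, dtype=float)

n_visible, n_hidden = 6, 2
weights = rng.normal(scale=0.01, size=(n_visible, n_hidden))
b_visible = np.zeros(n_visible)
b_hidden = np.zeros(n_hidden)

errors = [cd1_step(data, weights, b_visible, b_hidden, rng) for _ in range(500)]
print(errors[0], errors[-1])  # reconstruction error at the start and end of training
```

No labels or explicit rules are supplied: the weights are shaped only by how well the network reconstructs the examples it is shown.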

Applications:

- Hinton’s work has led to breakthroughs in many areas, from healthcare diagnostics to financial modeling and even AI technologies such as chatbots.

Note:

- The 2023 Nobel Prize in Physics was awarded to Pierre Agostini, Ferenc Krausz and Anne L’Huillier for experimental methods that generate attosecond pulses of light for the study of electron dynamics in matter.

Artificial neural networks (ANN):

- Artificial neural networks (ANNs) are inspired by the structure of the brain, where biological neurons interconnect to perform complex tasks. Artificial neurons (nodes) in an ANN process information collectively, with data flowing through weighted connections that play a role similar to synapses in the brain.

The general structure of ANN:

Recurrent neural networks (RNN):

- Recurrent neural networks (RNN) are trained on sequential or time series data to build a machine learning (ML) model that can make sequential predictions or present conclusions based on sequential input.
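As a rough illustration of how an RNN consumes sequential input, the sketch below runs a plain (Elman-style) recurrent layer over a short sequence. The weight shapes, the `tanh` activation, and the function name `rnn_forward` are assumptions for the example; the weights are random rather than trained.

```python
import numpy as np

def rnn_forward(inputs, w_xh, w_hh, b_h):
    """Run a simple recurrent layer over a sequence and return every hidden state."""
    hidden = np.zeros(w_hh.shape[0])
    states = []
    for x_t in inputs:
        # The new state depends on the current input AND the previous state,
        # which is how earlier items in the sequence influence later ones.
        hidden = np.tanh(w_xh @ x_t + w_hh @ hidden + b_h)
        states.append(hidden)
    return np.stack(states)

rng = np.random.default_rng(0)
sequence = rng.normal(size=(5, 3))        # 5 time steps, 3 features each
w_xh = rng.normal(scale=0.5, size=(4, 3)) # input-to-hidden weights
w_hh = rng.normal(scale=0.5, size=(4, 4)) # hidden-to-hidden (recurrent) weights
b_h = np.zeros(4)

hidden_states = rnn_forward(sequence, w_xh, w_hh, b_h)
print(hidden_states.shape)  # (5, 4)
```

A prediction layer would normally read from these hidden states; it is omitted here to keep the recurrence itself in focus.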

Convolutional neural networks (CNNs):

- Designed for grid-like data (e.g., images), CNNs process three-dimensional data (height, width, and channels) for image classification and object recognition tasks.
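The core operation of a CNN is sliding a small filter over the grid. The sketch below shows this for a single-channel image, which is a simplification of the three-dimensional case; the function name `conv2d_valid`, the toy image, and the edge-detecting kernel are illustrative assumptions. As in most deep learning libraries, the filter is applied without flipping (strictly speaking, cross-correlation).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kernel_h, kernel_w = kernel.shape
    out_h = image.shape[0] - kernel_h + 1
    out_w = image.shape[1] - kernel_w + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kernel_h, j:j + kernel_w]
            output[i, j] = np.sum(patch * kernel)
    return output

# 4x4 image: dark on the left, bright on the right.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)

# Kernel that responds to a dark-to-bright transition from left to right.
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)

feature_map = conv2d_valid(image, kernel)
print(feature_map)
# [[0. 2. 0.]
#  [0. 2. 0.]
#  [0. 2. 0.]]
```

The feature map is non-zero only where the filter straddles the vertical edge, which is how convolutional layers localize features in an image.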

Feedforward Neural Network:

- The simplest architecture, where information flows in one direction from input to output with fully connected layers.

- It is simpler than recurrent and convolutional neural networks.
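A forward pass through fully connected layers can be sketched as follows. The weights here are hand-picked (not learned) so that a two-layer network computes XOR; the function names, the ReLU activation, and the layer layout are assumptions made for the illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def feedforward(x, layers):
    """Pass x through each (weights, bias) layer in order; ReLU on hidden layers only."""
    activation = np.asarray(x, dtype=float)
    for index, (weights, bias) in enumerate(layers):
        activation = weights @ activation + bias
        if index < len(layers) - 1:
            activation = relu(activation)
    return activation

# Hand-picked weights: hidden units compute relu(x1 + x2) and relu(x1 + x2 - 1),
# and the output is their weighted difference, which equals XOR on binary inputs.
layers = [
    (np.array([[1.0, 1.0], [1.0, 1.0]]), np.array([0.0, -1.0])),
    (np.array([[1.0, -2.0]]), np.array([0.0])),
]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, feedforward(x, layers)[0])
# (0, 0) 0.0
# (0, 1) 1.0
# (1, 0) 1.0
# (1, 1) 0.0
```

Information moves strictly from input to output: there are no loops and no state carried between calls.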

Autoencoders:

- Used for unsupervised learning, these take input data, compress it so that only the most important parts remain, and then reconstruct the original data from this compressed version.

Generative Adversarial Network (GAN):

- A Generative Adversarial Network (GAN) is a powerful type of neural network used for unsupervised learning. It consists of two networks: a generator, which creates fake data, and a discriminator, which distinguishes between real and fake data.

- Through this adversarial training (a machine learning technique that is helpful in making models more robust), GANs generate realistic, high-quality samples.

- These are versatile AI tools, widely used in image synthesis, style transfer, and text-to-image synthesis, revolutionizing generative modeling.

Machine learning:

- It is a branch of artificial intelligence (AI) that uses data and algorithms to enable computers to learn from experience and improve their accuracy over time.

How it works:

- The decision-making process: Algorithms predict or classify data based on input, which can be labeled or unlabeled.

- Error function: This function evaluates the predictions of the model against known examples to assess accuracy.

- Model optimization: The model adjusts its weights to reduce the error, repeating the process until its predictions reach an acceptable level of accuracy.
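The three components above (prediction, error function, optimization) can be seen together in a minimal training loop. This sketch assumes a one-variable linear model, mean squared error as the error function, and plain gradient descent as the optimizer; the data, learning rate, and step count are illustrative choices.

```python
import numpy as np

# Known examples (hypothetical): the targets follow y = 2x + 1 exactly.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

def predict(w, b, x):
    """Decision process: map an input to a prediction."""
    return w * x + b

def mse(prediction, target):
    """Error function: average squared gap between prediction and known answer."""
    return float(np.mean((prediction - target) ** 2))

w, b = 0.0, 0.0
learning_rate = 0.05
initial_loss = mse(predict(w, b, x), y)

# Optimization: repeatedly nudge the weights in the direction that lowers the error.
for _ in range(2000):
    error = predict(w, b, x) - y
    w -= learning_rate * 2.0 * np.mean(error * x)
    b -= learning_rate * 2.0 * np.mean(error)

final_loss = mse(predict(w, b, x), y)
print(round(w, 3), round(b, 3))   # 2.0 1.0
print(final_loss < initial_loss)  # True
```

Real models have many more weights and use more elaborate optimizers, but the loop has the same shape: predict, measure the error, adjust.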

Machine learning vs deep learning vs neural networks:

- Hierarchy: AI includes machine learning, machine learning includes deep learning, and deep learning is built on neural networks.

- Deep learning: A subset of machine learning that uses neural networks with multiple layers (deep neural networks) and can process unstructured data without necessarily requiring labeled datasets.

- The neural network: A specific type of machine learning model structured in layers (input, hidden, output) that loosely mimics how the human brain works.

- Complexity: Moving from AI as a whole down to neural networks, the techniques become more complex and the tasks more specific; deep learning and neural networks are specialized tools within the broader AI framework.